<English cross post with my DCP blog>

A recent US Congressional survey stated that power companies are targeted by cyber attacks 10,000 times per month.

After the 2010 discovery of the Stuxnet virus, the North American Electric Reliability Corporation (NERC) established both mandatory standards and voluntary measures to protect against such cyber attacks, but most utility providers haven’t implemented NERC’s voluntary recommendations.

Stuxnet hit the (IT) newspaper front pages around September 2010, when Symantec announced the discovery. It represented one of the most advanced and sophisticated viruses ever found, and it targeted specific PLC devices in nuclear facilities in Iran:

Stuxnet is a threat that was primarily written to target an industrial control system or set of similar systems. Industrial control systems are used in gas pipelines and power plants. Its final goal is to reprogram industrial control systems (ICS) by modifying code on programmable logic controllers (PLCs) to make them work in a manner the attacker intended and to hide those changes from the operator of the equipment.

DatacenterKnowledge picked up on it in 2011, asking ‘is your datacenter ready for stuxnet?’

After this article the datacenter industry didn’t seem to worry much about the subject. Most of us deemed the chance of being hacked with a highly sophisticated virus, attacking our specific PLCs or facility controls, very low.

Recently security company Cylance published the results of a successful hack attempt on a BMS system located at a Google office building. This successful hack attempt shows a far greater threat for our datacenter control systems.

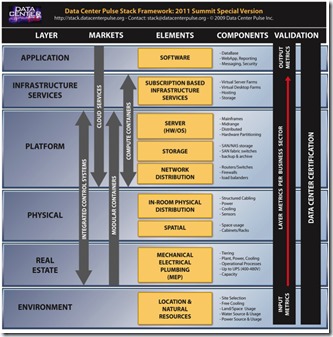

The road towards TCP/IP

Over the last few years the world of BMS & SCADA systems has changed radically. The old (legacy) systems consisted of vendor specific protocols, specific hardware and separate networks. Modern day SCADA networks consist of normal PCs and servers that communicate through IT standard protocols like IP, and share networks with normal IT services.

IT standards have also invaded facility equipment: the modern day UPS and CRAC is by default equipped with an onboard webserver capable of sending warnings using another IT standard: SNMP.

The move towards IT standards and TCP/IP networks has provided us with many advantages:

- Convenience: you are now able to manage your facility systems with your iPad or just a web browser. You can even enable remote access using Internet for your maintenance provider. Just connect the system to your Internet service provider, network or Wi-Fi and you are all set. You don’t even need to have the IT guys involved…

- Optimize: you are now able to do cross-system data collection so you can monitor and optimize your systems. Preferably in an integrated way so you can have a birds-eye view of the status of your complete datacenter and automate the interaction between systems.

Many of us end-users have pushed the facility equipment vendors towards this IT enabled world and this has blurred the boundary between IT networks and BMS/SCADA networks.

In the past the complexity of protocols like BACnet and Modbus, which tie everything together, scared most hackers away. We all relied on ‘security through obscurity’, but modern SCADA networks no longer provide this (false) sense of security.

Moving towards modern SCADA.

The transition towards modern SCADA networks and systems is approached in many different ways. Some vendors implemented embedded Linux systems on facility equipment. Others consolidated and connected legacy systems & networks on standard Windows or Linux servers acting as gateways.

This transition has not been easy for most BMS and SCADA vendors. A quick round among my datacenter peers provides the following stories:

- BMS vendors installing old OS versions (Windows/Linux) because the BMS application doesn’t support the updated ones.

- BMS vendors advising against OS updates (security, bug fix or end-of-support) because it will break their BMS application.

- BMS vendors unable to provide details on what ports to enable on firewalls; ‘just open all ports and it will work’.

- Facility equipment vendors without software update policies.

- Facility equipment vendors without bug fix deployment mechanisms; having to update dozens of facility systems manually.

And these stories all apply to modern day, currently used, BMS & SCADA systems.

Vulnerability patching.

Older versions of the SNMP protocol have had several known vulnerabilities that affected almost every platform with an SNMP implementation, including Windows, Linux, Unix and VMS.

It’s not uncommon to find these old SNMP implementations still operational in facility equipment. With the lack of software update policies, that also include the underlying (embedded) OS, new security vulnerabilities will also be neglected by most vendors.
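A first step is simply knowing which equipment is affected. The sketch below flags devices in an inventory that still run an older SNMP version or come without a vendor update policy. The `Device` fields, the device names and the choice to treat SNMPv1 and v2c as the "older versions" are my own assumptions for illustration; both of those versions do send their community strings unencrypted.

```python
from dataclasses import dataclass


@dataclass
class Device:
    """One piece of facility equipment (hypothetical inventory record)."""
    name: str
    snmp_version: str        # "v1", "v2c" or "v3"
    has_update_policy: bool  # does the vendor publish a software update policy?


# Assumption: v1 and v2c count as the older, weaker SNMP versions.
WEAK_SNMP_VERSIONS = {"v1", "v2c"}


def audit(devices):
    """Return {device name: [issues]} for every device that needs attention."""
    findings = {}
    for device in devices:
        issues = []
        if device.snmp_version.lower() in WEAK_SNMP_VERSIONS:
            issues.append("old SNMP version " + device.snmp_version)
        if not device.has_update_policy:
            issues.append("no vendor software update policy")
        if issues:
            findings[device.name] = issues
    return findings


# Made-up example inventory, not real equipment.
EXAMPLE_INVENTORY = [
    Device("ups-a", "v1", False),
    Device("crac-1", "v3", True),
    Device("pdu-7", "v2c", True),
]
```

Running `audit(EXAMPLE_INVENTORY)` lists `ups-a` and `pdu-7` and leaves `crac-1` out, which gives you a concrete list to take to the vendor.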

The OS implementation from most BMS vendors also isn’t hardened against cyber attacks. Default ports are left open, default accounts are still enabled.
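To see what a BMS server or UPS web card actually exposes, you can try to connect to a handful of well-known TCP ports. This is only a minimal sketch using Python's standard `socket` module, not a replacement for a real scanner. The port list is my own pick, and UDP services such as SNMP are not covered by a TCP connect test. Only run it against equipment you own or are allowed to test.

```python
import socket

# A small, hand-picked set of well-known TCP ports (an assumption; extend as needed).
COMMON_TCP_PORTS = {
    21: "FTP",
    23: "Telnet",
    80: "HTTP",
    443: "HTTPS",
    502: "Modbus/TCP",
}


def open_tcp_ports(host, ports, timeout=0.5):
    """Return the ports from `ports` that accept a TCP connection on `host`."""
    found = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising an exception.
            if sock.connect_ex((host, port)) == 0:
                found.append(port)
    return found


def describe(ports):
    """Turn a list of port numbers into readable 'port (service)' strings."""
    return [f"{p} ({COMMON_TCP_PORTS.get(p, 'unknown')})" for p in ports]
```

For example, `describe(open_tcp_ports("192.0.2.10", COMMON_TCP_PORTS))` would list what is reachable on a device at that (placeholder) address. Anything in that list the vendor can't explain is a candidate for closing.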

This is all great news for most hackers. It’s much easier for them to attack a standard OS like a Windows or Linux server. There are lots of tools available to make the life of the hacker easier and he doesn’t have to learn complex protocols like Modbus or BACnet. This is by far the best attack surface in modern day facility system environments.

The introduction of DCIM software will move us even more from the legacy SCADA towards an integrated & IT enabled datacenter facility world. You will definitely want to have your ‘birds-eye DCIM view’ of your datacenter anywhere you go, so it will need to be accessible and connected. All DCIM solutions run on mainstream operating systems, and most of them come with IT industry standard databases. Those configurations provide another excellent attack surface, if not managed properly.

ISO 27001

Some might say: ‘I’m fully covered because I got an ISO 27001 certificate’.

The scope of an ISO 27001 audit and certificate is set by the organization pursuing the certification. For most datacenter facilities the scope is limited to the physical security (like access control, CCTV) and its processes and procedures. IT systems and IT security measures are excluded because those are part of the IT domain and not facilities. So don’t assume that BMS and SCADA systems are included in most ISO 27001 certified datacenter installations.

Natural evolution

Most of the security and management issues are a normal part of the transition into a larger scale, connected IT world for facility systems.

The same lack of awareness on security, patching, managing and hardening of systems was seen in the IT industry 10-15 years ago. The move from a central mainframe world to decentralized servers and networks, combined with the introduction of the Internet, has forced IT administrators to focus on managing the security of their systems.

In the past I have heard Facility departments complain that IT guys should involve them more because IT didn’t understand power and cooling. With the introduction of a more software enabled datacenter the Facility guys now need to do the same and get IT more involved; they have dealt with all of this before…

Examples of what to do:

- Separate your systems and divide the network. Your facility system should not share its network with other (office) IT services. The separate networks can be connected using firewalls or other gateways to enable information exchange.

- Assess your real needs: not everything needs to be connected to the Internet. If facility systems can’t be hardened by the vendor or your own IT department, then don’t connect them to the Internet. Use firewalls and Intrusion Detection Systems (IDS) to secure your system if you do connect them to the Internet.

- Involve your IT security staff. Have facilities and IT work together on implementing and maintaining your BMS/SCADA/DCIM systems.

- Create awareness by urging your facility equipment vendor or DCIM vendor to provide a software update & security policy.

- Include the facility-systems in the ISO 27001 scope for policies and certification.

- Make arrangements with your BMS and/or DCIM vendor about who manages the underlying OS. Preferably this is handled by your internal IT guys who already should know everything about patching IT systems and hardening them. If the vendor provides you with an appliance, then the vendor needs to manage the patching process and hardening of the system.
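The first two points, separate networks joined only through a firewall, boil down to a deny-by-default allow-list instead of ‘just open all ports’. The sketch below models that idea with Python's standard `ipaddress` module. The address ranges, the monitoring host and the single HTTPS rule are all made-up assumptions, and a real firewall has its own rule syntax; this only shows the logic.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One allowed flow: source network -> destination network on a TCP port."""
    source: ipaddress.IPv4Network
    destination: ipaddress.IPv4Network
    port: int


# Hypothetical address plan: facility equipment lives in its own network.
FACILITY_NET = ipaddress.ip_network("10.20.0.0/24")
# Hypothetical single monitoring server on the office/IT side.
MONITORING_HOST = ipaddress.ip_network("10.10.5.20/32")

# Everything not listed here is denied.
ALLOW_RULES = [
    Rule(MONITORING_HOST, FACILITY_NET, 443),  # monitoring reads status over HTTPS
]


def is_allowed(src, dst, port, rules=ALLOW_RULES):
    """Return True only if some rule explicitly permits src -> dst on port."""
    src_ip = ipaddress.ip_address(src)
    dst_ip = ipaddress.ip_address(dst)
    return any(
        src_ip in rule.source and dst_ip in rule.destination and port == rule.port
        for rule in rules
    )
```

With these rules `is_allowed("10.10.5.20", "10.20.0.15", 443)` is true, while any other office PC, or the same monitoring host on Modbus/TCP port 502, is refused. The design choice is that a vendor has to tell you which flows are needed before anything gets through.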

If you would like to talk about the future of securing datacenter BMS/SCADA/DCIM systems then join me at Observe Hack Make (OHM) 2013. OHM is a five-day outdoor international camping festival for hackers and makers, and those with an inquisitive mind. It starts July 31st, 2013.

Note:

There are really good whitepapers on IDS systems (and firewalls) for securing Modbus and BACnet protocols, if you do need to connect those networks to the Internet. Example: Snort IDS for SCADA (pdf) or books about SCADA & security at Amazon.

Source:

A large part of this blog is based on a Dutch article on BMS/SCADA security January 2012 by Jan Wiersma & Jeroen Aijtink (CISSP). The Dutch IT Security Association (PViB) nominated this article for ‘best security article of 2012’.