Lecture: Operate unreliable IS in a reliable way

I recently started a lecture series at the Vrije Universiteit Amsterdam (VU). As part of this series, I gave a lecture on how to operate unreliable Information Systems in a reliable way – or: Everything breaks, All the time.

Synopsis:

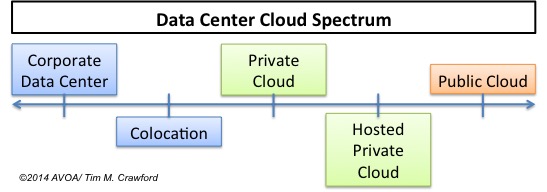

Behind the clouds of cloud computing! How can we reliably operate systems that are inherently unreliable?

What if, for a few hours, we lost access to services such as navigation, routing, and other communication technologies? It seems our lives would be at stake if major digital services failed! Many promises of digital technology, from big data to the Internet of Things, rest on reliable infrastructures such as cloud computing. What if these critical infrastructures fail? Do they, in fact, fail? How do the responsible companies and organizations manage these infrastructures reliably? And what does all this imply for companies that want to base their business on such services?

In the lecture we explored modern complex systems and how we got here, using examples from the journeys of Google and Amazon and how they relate to modern enterprise IT. We used the material of Mark Burgess to explore how to prevent systems from spiralling out of control. We closed by looking at knowledge management based on the ‘blameless retrospective’ principles, and at how feedback cycles from other domains are helping to create more reliable IT.

Relevant links supporting the lecture:

- Barroso, Clidaras, Hölzle: The Datacenter as a Computer. 2009/2013

- Mark Burgess: In Search of Certainty. 2013

- Woods: STELLA: Report from the SNAFUcatchers Workshop on Coping With Complexity. 2017

- Wiersma: Applying firefighter tactics to (IT) leadership. 2016

The slides used in the lecture can be found here: VU lecture

A recording of the session is available within the VU.

VU Assistant Professor Mohammad Mehrizi posted a nice review of the lecture on LinkedIn, including a picture with some of the students who attended.